Markov chain Monte Carlo (MCMC) methods are a class of algorithms for sampling from a probability distribution based on constructing a Markov chain that has the desired distribution as its equilibrium distribution. The Metropolis-Hastings (MH) algorithm is one of the best known of these methods, which was developed by Metropolis et al.1 and subsequently generalized by Hastings.2 Firstly, MH algorithm has been used in physics and was little known to statisticians until Muller3 and Tierney4 provided important applications using this algorithm. Then Chib & Greenberg5 gave some recent examples including relevant Markov chain theories which made more applications appeared in the recently literature. In the next section, we will provide a brief introduction to the MH-Algorithms and its work principles. We will also discuss the three different MH-Algorithms based on different sampling methods.

As for Stochastic Approximation in Monte Carlo Computation (SAMC), it was first introduced by Liang et al.6 As we know, the Metropolis-Hastings (MH) algorithm and the Gibbs sampler Geman7 are prone to get trapped into local energy minima in simulations from a system for which the energy landscape is rugged. In terms of physics, the negative of the logarithmic density/mass function is called the energy function of the system. To overcome this problem, many advanced Monte Carlo algorithms have been proposed, such as parallel tempering Geyer,8 Hukushima & Nemoto,9 simulated tempering Marinari & Parisi,10 Geyer & Thompson,11 evolutionary Monte Carlo Liang & Wong,12 dynamic weighting Wong & Liang,13 multicanonical sampling Berg & Neuhaus,14 1/k-ensemble sampling Hesselbo & Stinchcombe,15 the Wang-Landau algorithm Wang & Landau,16 equi-energy sampler Mitsutake et al.17 stochastic approximation Monte Carlo Liang et al.9 among others. In this paper, we adopt the SAMC algorithm to our problem, which has been proofed to be a sophisticated algorithm in both theory and applications. The basic idea of SAMC stems from the Wang-Landau algorithm and extends it to the continuum systems and it is designed to simulate data with a complex model structure.

The rest of the paper is organized as follows. In section 2, we will introduce three different sampling methods based on Metropolis Hastings Algorithms and SAMC algorithm for parameter estimations in the logistic regression. In section 3, we will give a simple simulation on the all of the methods and compare the results with the MLE method provided in R package. In section 4, we apply the proposed method to a Adult Intelligence data. In section 5, we conclude the paper with a brief discussion.

Metropolis hastings algorithms

The working principle of Markov Chain Monte Carlo methods is quite straight forward to describe. Given a target density, we build a Markov kernel with stationary distribution and then generate a Markov chain (

) using this kernel so that the limiting distribution of (

) is and integrals can be approximated according to the Ergodic Theorem. The difficulty should thus be in constructing a kernel that is associated with an arbitrary density. But, quite miraculously, there exist methods for deriving such kernels that are universal in that they are theoretically valid for any density

.

The Metropolis Hastings algorithm is an example of those methods. Given the target density, it is associated with a working conditional density

that, in practice, is easy to simulate. In addition, can be almost arbitrary in that the only theoretical requirements are that the ratio

is known up to a constant independent of and that has enough dispersion to lead to an exploration of the entire support of

. For every given , we can then construct a Metropolis Hastings kernel such that is its stationary distribution. Here is the general step of the Metropolis Hastings algorithm.

Algorithm (Metropolis Hastings)

- Initialize

.

- Given current value of

, generate a candidate

.

- Calculate acceptance probability

.

- Take

with probability

and

otherwise.

- Iterate between step (ii) and step (iv) until converge.

In this paper, we consider the logistic regression. We want to use Metropolis Hastings algorithm to fit the parameter of the model. i.e

and

in the following function.

(1)

And the likelihood function is

(2)

(3)

Consider the prior distribution of

and

as the independent normal distribution

(4)

Then the posterior distribution is

And we need to get the Markov sequence of the parameters. We propose to consider three sampling methods based on the general Metropolis Hastings algorithm, which is independent sampling, dependent sampling and individual sampling

Independent sampling

Assuming that the parameters

and

are independent, we can generate

from the proposal distribution

. Then the independent sampling can be stated as following.

Algorithm (Independent sampling)

- Initialize

.

- Generate from the proposal distribution

.

- Calculate acceptance probability

-

- We accept

with probability

or

otherwise.

- Iterate between step (ii) and step (iv) until converge.

Dependent sampling

If the parameters are not independent, the convergence of stochastic sequence may be less efficient. So we consider the multinomial prior for

. In this case, we use the Fisher information matrix

to build the proposal distribution.

(5)

Where

is a regulation parameter, which can be adjust to reach the target acceptance rate. Based on the likelihood function, we get the information matrix

(6)

Where

is the prior covariance matrix of

,

,

is a

matrix.

Then we give the algorithm for this method:

Algorithm (Dependent Sampling)

- Initialize

.

- Calculate Fisher Information matrix

-

-

- Generate from the proposal distribution

.

- Calculate acceptance probability

-

- We accept with probability

or

otherwise.

- Iterate between step (ii) and step (v) until converge.

Individual sampling

In the Dependent Sampling method, we need to adjust the regulation parameter

that make to reach the target acceptance rate. In order to avoid this process, we propose another MH sampling algorithm, i.e. Individual Sampling. For each parameter, we generate it individually from the normal proposal distribution. We only give the algorithm in two parameters condition, since when the dimension is too large and we need to calculate acceptance rate for each parameter, which is too time-consuming.

Algorithm (Individual Sampling)

- Initialize

.

- Generate from the proposal distribution

.

- Set

and calculate acceptance rate

-

- We accept

with probability

or

otherwise.

- Generate from the proposal distribution

.

- Set

and calculate acceptance rate

-

- We accept

with probability

or

otherwise.

- Iterate between step (ii) and step (vii) until converge.

Stochastic approximation Monte Carlo computation (SAMC)

Aforementioned, we introduce three MH algorithms for learning the Logistics regression. In this part, we will illustrate the Stochastic Approximation Monte Carlo Computation (SAMC) to deal with this problem.

Consider the sampling distribution that

, where c is a constant, x is generated from the sample space. We let

donate disjoint regions that from a partition of

, which can be partitioned according to any function of x such as the energy function

as follows:

. Then we let donate the estimate of and rewritten the invariant distribution in the generalized Wang-Landau (GWL) algorithm as

(7)

For theoretical simplicity, we assume that for all t, where is a compact set. Let be an m-vector with and , which defines the desired sampling frequency for each of the sub regions. Henceforth, is called the desired sampling distribution. Let be a positive, no decreasing sequence satisfying

(8)

For some. For example, in this article we set

(9)

For some specified value of .

In the logistic regression model, the invariant distribution

and the proposal distribution

. With the foregoing notation, one iteration of SAMC can be described as follows:

Algorithm (SAMC)

Generate a sample

by a single MH update with the target distribution given by

Generateaccording to the proposal distribution

. If

, then update to, where denote the collection of indices of the sub regions from which a sample has been proposed and denote the index of the sub region of sample.

Calculate the ratio

Accept the proposal with probability. If accepted

, set, otherwise, set

.

For all, update to as follows:

where

if

, and 0 otherwise.

In this part, we generate simple logistics regression data and use the Monte Carlo method to fit the model.

(10)

Based on the model and given the value of and, we generate 1000 samples of.

(11)

Then is generated from binomial distribution. We do iterations for 1000 times and calculate the mean and variance of the

in different methods (Table 1).

Based on the Table 1, we find that both MH algorithms and SAMC have good performance on calculating the parameters in logistics regression while SAMC has smallest variance which indicates a better convergence rate and robustness. Since the independence of also the is close to 0, all the three MH algorithms perform similarly. As for SAMC algorithm, we have to partition the sample space based on the energy function. The energy regions can set up the initial values spanned in the full model space and therefore can have faster convergence rate and lower variance than other MH algorithm. Finally, we use the MLE method, i.e the ’glm’ function in R to estimate the parameters for comparison. It shows that SAMC algorithm even achieve better performance than the MLE method in terms of the variance of the estimators. Therefore, both MH and SAMC algorithms are eligible to obtain the consistent estimators in the logistic regression but even for this simple problem, SAMC still can enjoy better performance than others.

|

(0.1,0.2) |

(0.6,0.3) |

(1,-3) |

(2,0.4) |

(-3,2) |

Independent |

mean |

(0.10,0.17) |

(0.56,0.27) |

(1.22,-3.01) |

(1.78,0.35) |

(-3.20,1.92) |

variance |

0.09,0.07 |

0.11,0.08 |

0.15,0.23 |

0.13,0.10 |

0.25,0.22 |

Dependent |

mean |

(0.10,0.18) |

(0.59,0.25) |

(1.12,-2.87) |

(2.11,0.38) |

(-3.13,2.05) |

variance |

0.09,0.07 |

0.09,0.08 |

0.15,0.23 |

0.13,0.10 |

0.25,0.22 |

Individual |

mean |

(0.10,0.17) |

(0.57,0.26) |

(1.12,-2.88) |

(2.13,0.39) |

(-2.98,2.08) |

variance |

0.09,0.06 |

0.11,0.08 |

0.15,0.23 |

0.13,0.10 |

0.25,0.22 |

SAMC |

mean |

(0.09,0.19) |

(0.62,0.27) |

(1.09,-2.99) |

(2.11,0.43) |

(-3.19,2.01) |

variance |

0.06,0.05 |

0.11,0.07 |

0.11,0.11 |

0.09,0.10 |

0.24,0.20 |

MLE |

mean |

(0.10,0.19) |

(0.59,0.3) |

(1.01,-2.97) |

(2.01,0.40) |

(-3.01,2.01) |

variance |

0.09,0.07 |

0.21,0.16 |

0.19,0.27 |

0.24,0.22 |

0.16,0.24 |

Table 1 Comparison of parameter estimations and variances for 5 pairs of

In this part, we apply Monte Carlo methods into a real example data set. Our dataset is obtianed from the Wechsler Adult Intelligence Scale (WAIS), which is a test designed to measure intelligence in adults and older adolescents and it is currently in its fourth edition (WAIS-IV). The original WAIS was published in February 1955 by David Wechsler, as a revision of the Wechsler-Bellevue Intelligence Scale that had been released in 1939. The propose of this test is to find the relationship between intelligence and Senile Dementia among older people. In our score scale, people (with and without Senile Dementia) were asked to do some tasks individually. When all tasks completed, they will get a score, ranging from 0-20. Therefore, the response is a binary response that reflects whether people have Senile Dementia. The WAIS score is the explanation variables. Based on data, we need to build the logistic model and estimate the parameters.

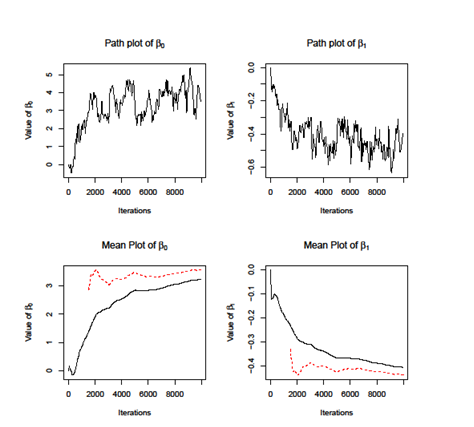

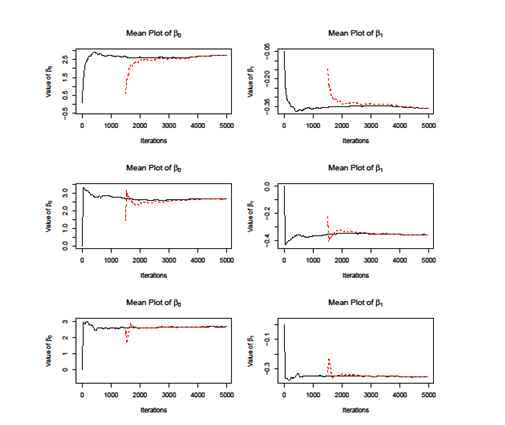

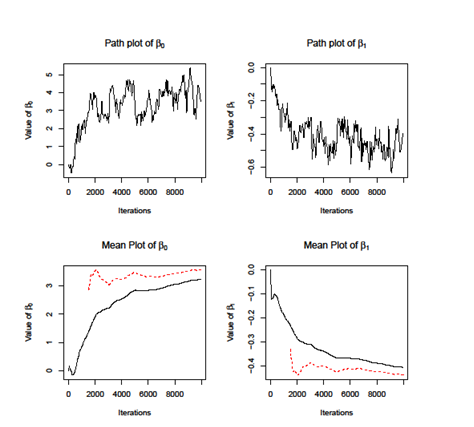

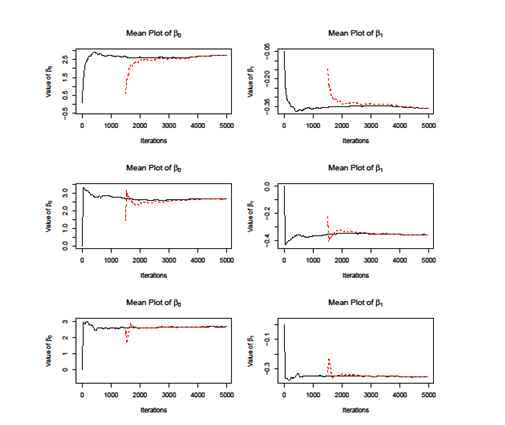

Firstly, we apply the MH Independent Sampling Algorithm. We set the length of chain equals to 10000 and get the plot of parameters of, . As showed in the lower two figures of Figure 1, the two lines does not coincide, which indicate that independent sampling algorithm does not converge. The independent sampling algorithm assumes that parameters are independent; however, it cannot be easily satisfied in the real application. We calculate the covariance of and, which is . Therefore, the independent sampling method cannot be applied here. Then we use another two MH algorithms, i.e dependent sampling and individual sampling as well as SAMC algorithm. We set the length of chain equals to 5000 and plot the cumulative mean curves for each method. As showed in Figure 2, all the methods converge when iteration grows while the SAMC converges faster than other, i.e; the two curves for SAMC coincide at around iteration 2000 while others need more iteration. To access the accuracy of estimations of and, we use the results from MLE method as benchmark as compare them with the ones from the Monte Carlo methods. As showed in Table 2, the results from SAMC are more close to the MLE and also it has the smallest variance among all other methods. According to the result, is significantly less than 0, which means people who get less score have higher risk of Senile Dementia.

Figure 1 The path and mean plots of , for MH Independent Sampling algorithm. The upper two plots are the trace plot of estimators of and at each iteration. The lower two plots are the cumulative mean of and , respectively. The solid line calculates the cumulative mean starting at iteration 1, while the dash lines starts at step 1500.

Figure 2 Comparison the cumulative mean plot of and for multiple Monte Carlo algorithms. The upper two panels denote the MH Dependent Sampling method; the middle two panels denote the MH individual sampling method; the lower two panels denote the SAMC method. The solid line calculates the cumulative mean starting at iteration 1, while the dash lines starts at step 1500.

|

Independent |

Dependent |

Individual |

SAMC |

MLE |

mean |

(2.68,-0.34) |

(2.61,-0.34) |

(2.62,-0.35) |

(2.57,-0.34) |

(2.40,-0.32) |

variance |

0.19,0.18 |

1.27,0.24 |

1.23,0.10 |

1.13,0.09 |

1.19,0.11 |

Table 2 Results of both value and variance for each method of

In this paper, we have proposed three MH algorithms and also SAMC to estimate the parameters of logistic regression. According to the simulation study, when the parameters are uncorrelated, each method has good performance. When the parameters are highly correlated, Independent Sampling method is less efficient and even cannot converge. The results showed that SAMC algorithms can be used in both scenarios and always outperform others. Compared with other MH algorithm, SAMC need less iterations to converge and have smaller variance of estimators even compared with MLE method.

One interesting further work is to apply the Monte Carlo simulations to high-dimensional big data problem. The challenge comes from two aspects. First, the data contains complex structure and therefore, the acceptance rate for most MCMC algorithm can be slow and therefore can be less efficient. Although SAMC has been proved to be a good method for the complex data and can overcome some local trap problem, it still needs to improve to the high-dimensional case where the likelihood function may not be intractable. Secondly, the big data have catastrophically large volumes and most single chain Monte Carlo simulations cannot apply. At each iteration, it should go through all the data which need large memory space for the computer machine and long time to generate the data at each iteration. For the big volume of data, we should consider parallel computing strategies, i.e, generate multiple Monte Carlo chains in parallel and then integrate chains to get the single estimator (Liang and Wu, 2013). Also, the high speed graphics processing unit (GPU) can be helpful for accelerating the speed of many MCMC algorithms.

In conclusion, we introduced several Monte Carlo simulation methods to estimate parameters of generalized linear model, i.e logistic regression. This problem can also be achieved by the MLE method which might be easier to calculate. However, our Monte Carlo algorithm can obtain a chain of estimations instead of a single value and therefore can provide more information about the process of reaching the true values of parameters and can be useful for further analysis.