eISSN: 2378-315X

Research Article Volume 3 Issue 6

¹Sanofi Genzyme, Cambridge, USA

²Lilly Research Laboratories, Eli Lilly and Company, USA

Correspondence: Xiaobi Huang, Sanofi Genzyme, Cambridge, MA, USA

Received: May 17, 2016 | Published: June 18, 2016

Citation: Huang X, Haoda F. A simulation study to decide the timing of an interim analysis in a bayesian adaptive dose-finding studies with delayed responses. Biom Biostat Int J. 2016;3(6):206-212. DOI: 10.15406/bbij.2016.03.00083

Bayesian adaptive designs have received considerable attention recently in dose-finding studies, where they have been shown to improve trial efficiency. Among adaptive dose-finding designs, the design with a single interim analysis is one of the most popular in pharmaceutical research because of its simplicity. For such one-interim designs, it is important to decide when to conduct the interim analysis: an early adaptation may suffer from a lack of information, while a late adaptation may lose efficiency. In this paper, we conduct a simulation study to evaluate the optimal time for the interim analysis in a one-interim dose-finding adaptive design with delayed responses, and to delineate the relationship between the timing of the interim analysis and the efficiency of the design.

Keywords: Bayesian adaptive design, dose-finding study, delayed response

One major challenge in drug development is finding the right dose. This is particularly important in Phase II trials, which need to identify the right dose for Phase III registration studies. Poor dose selection is one of the key reasons behind the 50% failure rate of Phase III clinical studies.1 Phase II trials often include several doses and are designed to establish a dose-response relationship, which ultimately informs Phase III dose selection. Traditional fixed designs, which often allocate patients equally across dose levels and wait until the end of the trial to estimate the dose-response curve, have been shown to be inefficient.2 During the past decade, Berry et al.3 have shown that adaptive designs can lead to better estimates of the dose-response curve. In an adaptive design, investigators can prespecify one or more interim time points at which to look at the data early, estimate the dose-response curve from the interim data, and then adjust the design. Depending on the purpose of the trial, decisions such as stopping the trial early for futility or adjusting the allocation ratio among arms can be made at these interim points. Many operational aspects must be considered when deciding how many interim analyses are needed. For example, some large trials run in multiple countries with more than a hundred clinical sites. The sponsor often needs weeks to receive the interim data after the interim data cut-off date, and another couple of weeks to make decisions; the whole process consumes a tremendous amount of resources. In addition, regulatory agencies often raise concerns about studies with many interim analyses, which may introduce bias. Therefore, although an adaptive design may include several interim analyses, the design with only one interim analysis is the most popular in pharmaceutical research.
For these one-interim dose-finding adaptive designs, it is important to decide when to conduct the interim analysis since an early adaptation may suffer from the lack of information while a later adaptation may cause loss of efficiency.

There are many relevant references on adaptive design.3,4 Most of these methods use only the values from patients who have completed the study. As a consequence, they are most useful when patients complete the study quickly and treatment effects appear quickly, so that their data can be used for adaptation. However, many studies have delayed responses. For example, treatments for obesity, depression and diabetes often take weeks to demonstrate clear treatment effects, and the treatment period for each patient lasts a few months. In these studies, most patients may not yet have reached the endpoint at the interim analysis; it is not unusual for only a small proportion (e.g., 20%) of patients to have completed the study at that point. This situation creates new challenges and makes the decision on interim timing even more difficult.

Fu & Manner5 developed an Integrated Two-component Prediction (ITP) model in the context of Bayesian adaptive design with delayed responses. In this paper, we extend their work to evaluate the optimal timing of the interim analysis for one-interim dose-finding adaptive designs. We conduct simulation studies to evaluate the timing of the interim analysis under different dosing regimens and enrollment rates; the results provide general recommendations about the timing of an interim analysis. In Section 2, we review the ITP model and the utility-based Bayesian adaptive design in detail. In Section 3, we illustrate our procedure for searching for the optimal interim timing using Phase II dose-finding trials under three different dosing regimens, and we discuss how the optimal timing changes with the enrollment rate. In Section 4, we discuss how our results extend previous research on Bayesian dose-finding adaptive designs, and their possible applications.

Fu and Manner5 proposed a Bayesian dose-finding adaptive design for delayed responses. The approach has two major steps. The first step fits a Bayesian integrated two-component prediction (ITP) model at the time of the interim analysis to predict the drug effect at each dose level after treatment completion; the ITP model thus predicts the dose effect at study completion while subjects have not yet completed the study. The second step applies a utility-based criterion to decide which dose arms to keep and which to drop. We describe these methods in detail in Sections 2.1 and 2.2.

Integrated two-component prediction (ITP) model

In certain disease areas, such as diabetes and obesity, drug effects can only be clearly observed after a period of time. Because subjects are enrolled at different times, the whole study can take months or even years to complete, and at the time of the interim analysis only a few subjects may have completed the study. The ITP model was developed to use all observed interim data to predict the drug effect at the end of the study:

$$y_{ijl} = \left(\theta_i + s_{ij} + \varepsilon_{ijl}\right) \frac{1 - \exp(k\, t_{ijl})}{1 - \exp(k\, d)} \qquad (1)$$

where $y_{ijl}$ is an observation (change from baseline) from dose level $i$, subject $j$, at time $l$. The model has two components. The first component is a mixed-effect analysis of variance (ANOVA) model, which describes the predicted drug effect at study completion. Here, $\theta_i$ represents the predicted dose effect for dose level $i$ at study completion ($t_{ijl} = d$). Parameter $s_{ij}$ is the random effect for dose and subject, with standard deviation $\sigma_s$, which describes the between-subject variability, while $\varepsilon_{ijl}$ is the residual error, with standard deviation $\sigma_\varepsilon$, which describes the within-subject variability. The second component depicts the time course of the treatment effect, where $t_{ijl}$ is the time covariate for dose level $i$, subject $j$ at time $l$, and $d$ is the duration of the study. Note that the second component equals 1 when $t_{ijl} = d$. The shape parameter $k$ describes how the drug effect changes over time. The second component can be considered a time effect that extrapolates the drug effect over the time course, thus enabling the model to predict the drug effect at study completion while the subject has not yet completed the study. Because the random terms are scaled by the same time effect as $\theta_i$, the model assumes that the coefficient of variation is constant over time; Shargel et al.6 suggested that this is common in biological experiments.
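To make the structure of model (1) concrete, the Python sketch below simulates one subject's trajectory. It assumes, for illustration only, that $s_{ij}$ and $\varepsilon_{ijl}$ are normally distributed; the function names `time_effect` and `simulate_itp_subject` and all parameter values in the usage line are hypothetical choices, not values from Fu & Manner.

```python
import math
import random


def time_effect(t, k, d):
    """Second ITP component: (1 - exp(k t)) / (1 - exp(k d)); equals 1 at t = d."""
    return (1.0 - math.exp(k * t)) / (1.0 - math.exp(k * d))


def simulate_itp_subject(theta_i, k, d, times, sigma_s, sigma_e, rng):
    """Simulate y_ijl for one subject under model (1) (illustrative sketch).

    theta_i : dose effect at study completion (t = d)
    sigma_s : between-subject SD of the random effect s_ij (normality assumed)
    sigma_e : within-subject SD of the residual e_ijl (normality assumed)
    """
    s_ij = rng.gauss(0.0, sigma_s)  # one random effect per subject
    ys = []
    for t in times:
        e_ijl = rng.gauss(0.0, sigma_e)  # fresh residual at each visit
        # The ANOVA part, noise included, is scaled by the time effect,
        # so the coefficient of variation stays constant over time.
        ys.append((theta_i + s_ij + e_ijl) * time_effect(t, k, d))
    return ys


rng = random.Random(1)
weeks = [0, 2, 4, 6, 8, 10, 12]
print(simulate_itp_subject(theta_i=-1.5, k=-0.3, d=12, times=weeks,
                           sigma_s=1.0, sigma_e=0.5, rng=rng))
```

At $t = 0$ every simulated change from baseline is 0, and at $t = d$ the expected value is $\theta_i$, which is what lets the model extrapolate partial trajectories to study completion.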

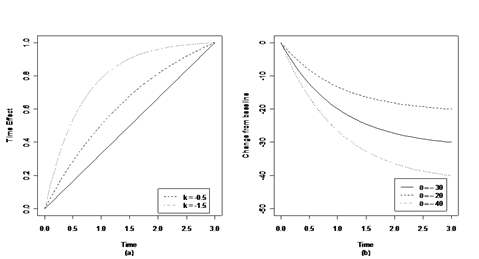

Figure 1(a) illustrates how the second component (time effect) in (1) extrapolates the drug effect across time in a 3-month study ($d = 3$). Generally, parameter $k$ is less than 0, which means that the drug effect changes faster at earlier times than at later times. This matches the law of diminishing returns in pharmacokinetics and pharmacodynamics.6,7 The larger the absolute value of $k$, the faster the drug takes effect at the beginning. Figure 1(b) illustrates the drug effect across time. Parameter $\theta_i$ in the first component defines the drug effect after 3 months of dosing, and the second component extrapolates it over time. At 3 months, the drug effect reaches its maximum.
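Why $k < 0$ produces a fast early onset can be seen from the derivatives of the time effect $g(t) = (1 - e^{kt})/(1 - e^{kd})$ in (1). For $k < 0$ and $d > 0$ the denominator $1 - e^{kd}$ is positive, so

$$
g'(t) = \frac{-k\,e^{kt}}{1 - e^{kd}} > 0, \qquad
g''(t) = \frac{-k^{2}\,e^{kt}}{1 - e^{kd}} < 0 .
$$

Thus $g$ rises from $g(0) = 0$ to $g(d) = 1$ and is concave, so equal time intervals add progressively smaller gains; as $k \to 0$, $g(t) \to t/d$, a straight line. For example, with $d = 3$, the share of the month-3 effect reached at month 1.5 is about 0.68, 0.82 and 0.95 for $k = -0.5$, $-1$ and $-2$, respectively.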

Figure 1 Parameters in the ITP model. Parameter $k$ describes the shape of the time effect; parameter $\theta_i$ is the drug effect at the end of dosing ($t = d$).

Panel (a) illustrates the time effect and how it changes as $k$ changes.

Panel (b) illustrates the drug effect over time, with $k$ fixed at -1.

The ITP model captures the nonlinear relationship between drug effect and time well. It also describes the increasing variance of the response over time that has been observed historically. Because patients join the study at different time points, the ITP model can predict the drug effect at dosing completion while subjects are still under investigation. Moreover, the model can handle different types of missing data, such as data missing completely at random (MCAR) or missing at random (MAR), as defined by Little & Rubin.8

Many choices of prior are possible. For example, we use a noninformative prior,

where 1 represents a flat prior. Informative priors can also be used when reliable data are available to support them.

The posterior distributions of the parameters are estimated by Markov chain Monte Carlo (MCMC).

Utility-based Bayesian adaptive design

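We make decisions based on the interim data. Let $p(\Theta \mid I)$ be the posterior distribution from the ITP model given the interim data $I$, where $\Theta$ represents all the parameters of interest. For each decision $\delta$ in the set of all possible decisions $\mathcal{D}$, such as dropping arms or changing the allocation ratio, we can calculate the posterior predictive distribution of the future trial data $\tilde{Y}_\delta$:

$$p(\tilde{Y}_\delta \mid I) = \int p(\tilde{Y}_\delta \mid \Theta, \delta)\, p(\Theta \mid I)\, d\Theta$$

We then choose the decision that optimizes a utility function $U(\delta)$ computed from this predictive distribution:

$$\delta^{*} = \arg\max_{\delta \in \mathcal{D}} U(\delta)$$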

Consider the example studied by Fu and Manner (2010). In a dose-finding trial, one plans to drop inefficacious dose arms after the interim analysis. At the beginning, there are 9 arms in the study: 8 treatment arms and 1 placebo arm. After the interim analysis, 4 treatment arms will be dropped. The goal of the study is to maximize our learning about ED90, the minimum dose that achieves 90% of the maximum efficacy, which can be estimated from an Emax model.6,7 At the interim, there are $\binom{8}{4} = 70$ possible decisions for choosing 4 of the 8 treatment arms, and thus 70 sets of posterior predictive data, denoted $\tilde{Y}_\delta$, $\delta = 1, \dots, 70$. We then continue to enroll subjects into the 4 kept treatment arms until the total sample size is reached. The more precisely ED90 is estimated, the better our choice of treatment arms. Thus a utility function can be defined as $U(\delta) = -\mathrm{Var}\big(\widehat{\mathrm{ED}}_{90}(\tilde{Y}_\delta)\big)$, where $\widehat{\mathrm{ED}}_{90}(\tilde{Y}_\delta)$ is the ED90 estimated from the posterior predictive data $\tilde{Y}_\delta$. Since maximizing $U(\delta)$ is equivalent to minimizing the variance, the optimal decision minimizes the variance of the estimated ED90:

$$\delta^{*} = \arg\min_{\delta \in \mathcal{D}} \mathrm{Var}\big(\widehat{\mathrm{ED}}_{90}(\tilde{Y}_\delta)\big) \qquad (2)$$

The set of possible decisions depends on the goal of the study as well as on simplicity and efficiency considerations. For example, to make the design more flexible, the decision rules can be extended to choose the optimal number of arms to keep. Other utility functions can also be defined for different purposes.
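Decision rule (2) amounts to an exhaustive search over the 70 ways of keeping 4 of the 8 treatment arms. The Python sketch below shows only that search. The callback `predictive_ed_estimates` is a hypothetical placeholder for the expensive part (simulating posterior predictive data from the ITP fit under each decision and refitting the Emax model), and `toy_estimates` is an artificial stand-in used only to exercise the code.

```python
from itertools import combinations
from statistics import variance


def choose_arms(n_arms, n_keep, predictive_ed_estimates):
    """Pick the arms to keep by minimizing Var(ED estimate), as in Eq. (2).

    predictive_ed_estimates(kept) is a hypothetical callback returning the ED
    estimates from Emax fits to posterior predictive data sets simulated
    under the decision "keep these arms".
    """
    best_arms, best_var = None, float("inf")
    for kept in combinations(range(n_arms), n_keep):  # C(8, 4) = 70 decisions
        v = variance(predictive_ed_estimates(kept))
        if v < best_var:  # utility U = -Var, so a smaller variance is better
            best_arms, best_var = kept, v
    return best_arms, best_var


def toy_estimates(kept):
    """Artificial stand-in: a wider spread of kept doses gives tighter estimates."""
    half_width = 1.0 / (1 + max(kept) - min(kept))
    return [10.0 - half_width, 10.0, 10.0 + half_width]


print(choose_arms(8, 4, toy_estimates))
```

With the toy callback, the search returns `(0, 1, 2, 7)`, the first subset in lexicographic order that contains both the lowest and the highest arm. Because the loop only compares variances, any other utility could be swapped in by changing the quantity being minimized.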

The simulation in Fu & Manner5 showed that the Bayesian adaptive design using the ITP model is more efficient than the traditional design with an equal number of subjects in each treatment arm. However, the performance of the ITP model also depends on the amount of information available at the interim. If the interim analysis is conducted too early, we may not obtain a precise prediction of the dose response, and the decision may not be optimal. On the other hand, if the interim analysis is conducted too late, many patients will already have been allocated to inefficient treatment arms, and the design loses efficiency. In this section, we conduct a simulation study to assess the timing of the interim analysis. These simulations provide insight into the optimal interim timing and offer general guidance.

Study design

Consider a Phase II Bayesian adaptive dose-finding clinical trial for a type II diabetes compound: a multicenter, randomized, double-blind, parallel-group, placebo-controlled study. The objective is to understand the dose-response relationship.

There are four study periods for each patient. In period 1, subjects will go through a screening process from week -3 to week -2. Period 2 is a pretreatment washout period from week -2 to week 0. At week 0, subjects will be randomized to the placebo arm or one of the 8 treatment arms. In period 3, subjects will receive treatment or placebo from week 0 to week 12, with biweekly visits at which fasting blood glucose will be measured. In period 4, subjects will undergo a post-treatment washout period from week 12 to week 16.

The duration of the study for each patient is 19 weeks in total (week -3 to week 16). There will be 7 measurements per patient, including baseline (baseline at week 0 plus biweekly visits through week 12). Initially, there are 8 treatment arms and a placebo arm with an equal number of subjects allocated to each. An interim analysis is planned at some time during the study, after which 4 treatment arms will be dropped and incoming subjects will be allocated to the remaining arms.

Simulation plan

The study plans to enroll 324 patients, of whom 36 will be randomized to the placebo arm and 288 to the treatment arms. Suppose the sponsor is able to control the enrollment speed by opening more sites. We evaluate the impact of enrollment speed using three rates: 18, 36 and 54 patients per month. The duration of the whole study is therefore determined by the enrollment rate; Table 1 gives the duration of the whole study under each enrollment rate.

| Number of Sites | Enrollment Rate | Duration of Study |
|---|---|---|
| 1 | 18/Month | 21 Months |
| 2 | 36/Month | 12 Months |
| 3 | 54/Month | 9 Months |

Table 1 Duration of Entire Study under Different Enrollment Rate
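The durations in Table 1 are consistent with the time needed to enroll all 324 patients plus the 12-week (about 3-month) treatment period of the last patient, measured from FPFO. This decomposition is our reading of the table rather than a formula stated in the text; the sketch below, with the hypothetical helper `study_duration_months`, reproduces the three entries under that assumption.

```python
def study_duration_months(n_total, rate_per_month, treatment_months=3.0):
    """Months from FPFO to the end of the last patient's 12-week treatment.

    Assumes linear enrollment and approximates 12 weeks as 3 months.
    """
    enrollment_months = n_total / rate_per_month
    return enrollment_months + treatment_months


for rate in (18, 36, 54):
    print(f"{rate}/month: {study_duration_months(324, rate):g} months")
```

This prints 21, 12 and 9 months for 18, 36 and 54 patients per month, matching Table 1.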

Before the interim analysis, subjects are equally randomized to the placebo arm and the 8 treatment arms. After the interim analysis, 4 treatment arms are dropped and the remaining subjects are randomized to the placebo arm and the 4 kept treatment arms. The target number of subjects on the placebo arm does not change and remains 36. For example, suppose the enrollment speed is 36 patients per month and the interim analysis takes place 3 months after the first patient's first observation (FPFO). Then 108 subjects are enrolled by the interim, with 12 subjects randomized to each of the 9 arms. After the interim, another 216 subjects are randomized to the remaining 5 arms: 24 to the placebo arm and 48 to each kept treatment arm. Thus, at the end of the study, there are 12 patients in each dropped treatment arm, 60 in each kept treatment arm and 36 in the placebo arm. Note that the sample sizes of the treatment arms vary with the timing of the interim analysis: if the interim analysis is conducted earlier, there are fewer patients in the dropped arms and more in the kept arms; conversely, if it is conducted later, there are more patients in the dropped arms and fewer in the kept arms.
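The allocation arithmetic in the example above can be written as a small Python function. The sketch assumes, as in the text, that enrollment is linear in time, that the 9 arms are equally randomized before the interim, and that the placebo arm is topped up to its target of 36 afterwards; the function name `final_allocation` is hypothetical.

```python
def final_allocation(rate, t_interim, n_total=324, n_placebo=36, n_arms=8, n_keep=4):
    """End-of-study sample size per arm for the one-interim drop-arm design.

    Assumes linear enrollment from FPFO and equal randomization across the
    n_arms + 1 arms before the interim.
    """
    n_interim = round(rate * t_interim)   # subjects enrolled by the interim
    per_arm = n_interim // (n_arms + 1)   # each of the 9 arms before the interim
    placebo_after = n_placebo - per_arm   # placebo is topped up to its target
    kept_after = (n_total - n_interim - placebo_after) // n_keep
    return {"dropped": per_arm, "kept": per_arm + kept_after,
            "placebo": per_arm + placebo_after}


print(final_allocation(36, 3))
```

`final_allocation(36, 3)` returns `{'dropped': 12, 'kept': 60, 'placebo': 36}`, matching the example; moving the interim later shifts subjects from the kept arms to the dropped arms until, at 9 months, every arm has 36 subjects.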

To assess when an interim analysis is appropriate, we simulate interim analyses under different timings and enrollment rates. We evaluate interim times ranging from 2 months after FPFO to the time at which every arm has an equal number of subjects (equivalent to the traditional design), in increments of half a month. The time points evaluated are listed in Table 2. There are 500 simulations under each setting.

| Number of Sites | Enrollment Rate | Interim Time (Months after FPFO) |
|---|---|---|
| 1 | 18/Month | 2.0, 2.5, 3.0, 3.5, …, 18 |
| 2 | 36/Month | 2.0, 2.5, 3.0, 3.5, …, 9 |
| 3 | 54/Month | 2.0, 2.5, 3.0, 3.5, …, 6 |

Table 2 Time of Interim Analysis Evaluated in the Simulation
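The grids in Table 2 follow from starting at 2 months and stepping by half a month until all 324 subjects are enrolled (324/rate months), at which point every arm has 36 subjects. A minimal sketch, with the hypothetical helper `interim_grid`:

```python
def interim_grid(rate, n_total=324, start=2.0, step=0.5):
    """Candidate interim times (months after FPFO), as in Table 2.

    The grid ends when all n_total subjects are enrolled, i.e. when every arm
    has the same number of subjects (the traditional design).
    """
    end = n_total / rate
    n_steps = int((end - start) / step + 1e-9)  # floor, guarding against rounding
    return [start + i * step for i in range(n_steps + 1)]


for rate in (18, 36, 54):
    grid = interim_grid(rate)
    print(rate, len(grid), grid[0], grid[-1])
```

This yields 33, 15 and 9 candidate interim times for 18, 36 and 54 patients per month, respectively, that is, 57 settings per dosing scenario, each simulated 500 times.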

In each simulation, at the interim analysis, we apply the ITP model with noninformative priors to obtain the posterior distribution of the treatment effects and other parameters, such as the between- and within-subject standard deviations $\sigma_s$ and $\sigma_\varepsilon$. The posterior samples are obtained from an MCMC algorithm. There are 70 possible combinations for dropping 4 of the 8 treatment arms. Under each combination, we simulate 1000 posterior predictive samples of trial data using the parameters obtained from the ITP model. For each posterior predictive data set, we fit an Emax model to the last observation of each patient to estimate ED50, the minimal dose that achieves 50% of the maximal drug effect. We then apply the utility-based method (Eq. (2), with ED90 replaced by ED50) to find the combination of treatment arms with the smallest variance of the estimated ED50.

Below is the Emax model we use for this paper:

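$$y_{ij} = E_0 + \frac{E_{\max}\, D_i^{h}}{\mathrm{ED}_{50}^{h} + D_i^{h}} + \epsilon_{ij} \qquad (3)$$

where $y_{ij}$ is the response of subject $j$ at dose level $i$, $D_i$ is the $i$th dose, $E_0$ is the placebo effect, $E_{\max}$ is the maximal drug effect, $\mathrm{ED}_{50}$ is the minimal dose that achieves 50% of the maximal drug effect, $h$ is the Hill parameter controlling the shape of the curve, and $\epsilon_{ij}$ is the residual error. The parameter of interest here is $\mathrm{ED}_{50}$, since for a fixed $h$, $\mathrm{ED}_{90}$ is proportional to $\mathrm{ED}_{50}$: $\mathrm{ED}_{90} = 9^{1/h}\,\mathrm{ED}_{50}$. We can therefore derive ED90 from ED50 and $h$, and estimating ED50 is a crucial step in estimating ED90 and choosing doses for Phase III.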
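The relation between ED50 and ED90 used above follows from solving $D^h/(\mathrm{ED}_{50}^h + D^h) = p$ for $D$, which gives $\mathrm{ED}_p = \mathrm{ED}_{50}\,\{p/(1-p)\}^{1/h}$. The Python sketch below encodes the mean of model (3) and this conversion; the function names are hypothetical.

```python
def emax_mean(dose, e0, emax, ed50, h):
    """Mean response of the sigmoid Emax model (3) at a given dose."""
    return e0 + emax * dose**h / (ed50**h + dose**h)


def ed_p(ed50, h, p):
    """Dose giving a fraction p of the maximal effect: ED50 * (p / (1 - p))**(1/h)."""
    return ed50 * (p / (1.0 - p)) ** (1.0 / h)


for h in (1.0, 2.0):
    print(h, ed_p(10.0, h, 0.9))
```

For example, with $h = 1$, ED90 is 9 times ED50, and with $h = 2$ it is 3 times ED50, up to floating-point rounding.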

After the interim analysis, we allocate the remaining subjects to the placebo arm and the 4 kept treatment arms as described above. At the end of the study, we fit the same Emax model to the last observation of each subject to obtain the estimate of ED50 for that simulation. We repeat the whole process 500 times to obtain 500 estimates of ED50. The loss is evaluated by the loss function:

In our simulation, we assume that the true dose-response curve follows the Emax model (Eq. (3)) with fixed true parameter values. Between-subject variability accounts for 80% of the total variance and within-subject variability for 20%; assuming a true total variance of 25, $\sigma_s^2 = 20$ and $\sigma_\varepsilon^2 = 5$. The time effect (shape parameter $k$) is set based on previous experience. The missing data mechanism is missing completely at random (MCAR) with a 22.5% dropout rate. To simulate missingness, we first select the patients who drop out from a Bernoulli distribution with probability 0.225. We then simulate the dropout time from a uniform distribution ranging from 0 to 12 weeks, the duration of the treatment period. After a patient drops out, all of that patient's subsequent data are missing. Furthermore, we simulate under the following three dosing scenarios:

Optimistic: 1mg, 5mg, 10mg, 20mg, 40mg, 80mg, 120mg, 200mg;

Neutral: 1mg, 8mg, 20mg, 75mg, 120mg, 200mg, 300mg, 500mg;

Pessimistic: 10mg, 30mg, 80mg, 160mg, 300mg, 500mg, 800mg, 1000mg.

These scenarios are called optimistic, neutral and pessimistic according to how confident our guess about the dose-response curve is before the study (Fu & Manner5).
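The MCAR dropout mechanism described above can be sketched as follows for one patient's seven scheduled measurements. The names `VISIT_WEEKS` and `apply_mcar_dropout` are hypothetical, and we assume that a visit at exactly the dropout time is still observed and that missing values are represented by `None`.

```python
import random

# Biweekly measurements from baseline (week 0) through week 12: 7 in total.
VISIT_WEEKS = list(range(0, 13, 2))


def apply_mcar_dropout(values, rng, p_drop=0.225, t_max=12.0):
    """Apply the MCAR dropout scheme to one patient's visit values.

    Returns a copy in which every visit after the dropout time is None.
    """
    if rng.random() >= p_drop:  # Bernoulli(p_drop) decides who drops out
        return list(values)
    t_drop = rng.uniform(0.0, t_max)  # dropout time ~ Uniform(0, 12) weeks
    return [v if week <= t_drop else None for week, v in zip(VISIT_WEEKS, values)]


rng = random.Random(2016)
print(apply_mcar_dropout([0.0, -0.4, -0.7, -0.9, -1.0, -1.1, -1.1], rng))
```

Because the dropout decision and time are drawn independently of the responses, the missingness is MCAR by construction; baseline is always observed, and once a visit is missing, all later visits are missing too.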

Simulation results

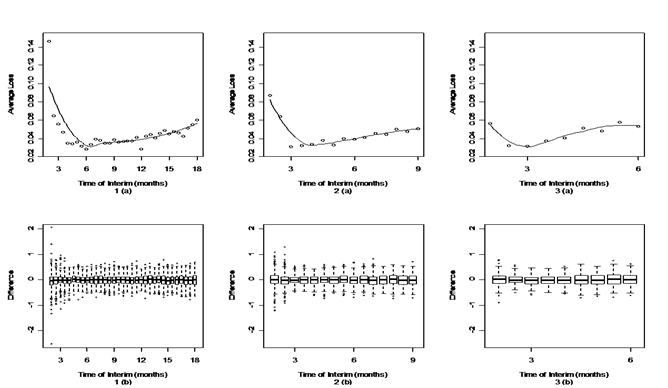

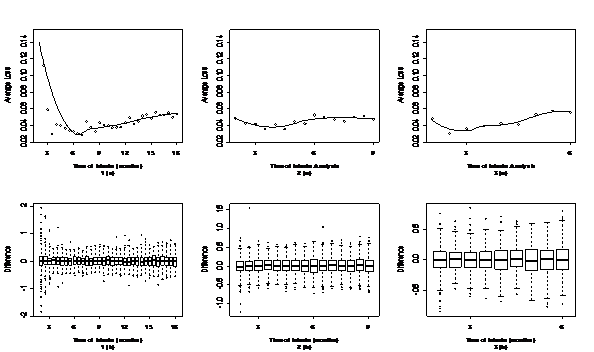

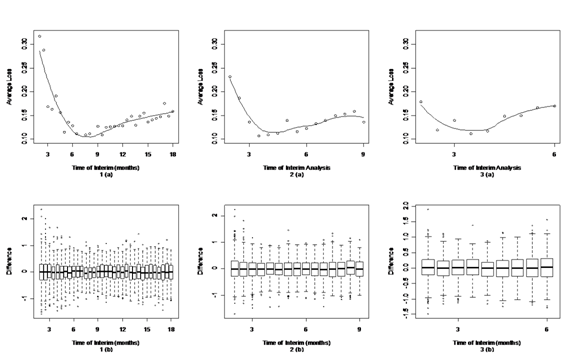

Figures 2-4 present the simulation results under the optimistic, neutral and pessimistic dosing scenarios, respectively. In each figure, the upper panels present scatter plots of the average loss against interim analysis time under the three enrollment rates, with a fitted loess curve smoothing the average losses over time. The lower panels present the corresponding box plots summarizing the differences between the true and estimated ED50 in the simulation; the narrower the box plots, the smaller the losses. These figures show an apparent U-shaped curve in every situation, which suggests that, in general, adaptive designs outperform traditional designs with equal sample sizes when the interim analysis is appropriately timed. The average loss drops sharply during the early months until the curve reaches its minimum, and increases slowly thereafter. This suggests that if the interim analysis is conducted too early, the average loss can be even higher than that of the traditional design. Once information has accumulated to a certain point, conducting an interim analysis can substantially decrease the average loss and improve trial efficiency. If the interim analysis is conducted later than the optimal time, the average loss increases slowly but steadily.
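The optimal times reported below are read off the fitted loess curves. The Python sketch that follows is a minimal loess-style smoother (local linear fits with tricube weights, without the robustness iterations of full loess) followed by a search for the minimizing interim time. The function names and the default span `frac=0.5` are assumptions, not the settings used to produce the figures, and the losses in the usage lines are synthetic, not the paper's results.

```python
def local_linear_smooth(xs, ys, frac=0.5):
    """Loess-style smoother: tricube-weighted local linear fit at each x.

    A minimal sketch (degree 1, no robustness iterations), not R's loess().
    """
    n = len(xs)
    q = max(2, int(round(frac * n)))  # number of points in each neighbourhood
    fitted = []
    for x0 in xs:
        # Bandwidth = distance to the q-th nearest point, inflated slightly so
        # that point keeps a small positive weight.
        h = sorted(abs(x - x0) for x in xs)[q - 1] * 1.000001 or 1.0
        w = [max(0.0, 1.0 - (abs(x - x0) / h) ** 3) ** 3 for x in xs]
        sw = sum(w)
        xbar = sum(wi * x for wi, x in zip(w, xs)) / sw
        ybar = sum(wi * y for wi, y in zip(w, ys)) / sw
        sxx = sum(wi * (x - xbar) ** 2 for wi, x in zip(w, xs))
        sxy = sum(wi * (x - xbar) * (y - ybar) for wi, x, y in zip(w, xs, ys))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(ybar + slope * (x0 - xbar))  # local line evaluated at x0
    return fitted


def optimal_interim(times, avg_losses, frac=0.5):
    """Return (time, smoothed loss) at the minimum of the smoothed loss curve."""
    fitted = local_linear_smooth(times, avg_losses, frac)
    best = min(range(len(times)), key=fitted.__getitem__)
    return times[best], fitted[best]


# Synthetic U-shaped average losses on a half-month grid (illustration only).
times = [2.0 + 0.5 * i for i in range(15)]
losses = [0.03 + 0.004 * (t - 4.0) ** 2 for t in times]
print(optimal_interim(times, losses))
```

Smoothing before taking the minimum matters because each average loss is itself a Monte Carlo estimate from 500 simulations; picking the raw minimum would chase noise.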

Figure 2 Timing for interim analysis for different enrollment rates under optimistic dosing scenario.

The upper panels (a) are the scatter plots and corresponding loess curve of average loss at different interim time.

The lower panels (b) are the box plots of difference between the true and estimated ED50.

Panels 1(a) and 1(b) are from an enrollment rate of 18 subjects per month.

Panels 2(a) and 2(b) are from an enrollment rate of 36 subjects per month.

Panels 3(a) and 3(b) are from an enrollment rate of 54 subjects per month.

Figure 3 Timing for interim analysis for different enrollment rates under neutral dosing scenario.

The upper panels (a) are the scatter plots and corresponding loess curve of average loss at different interim time.

The lower panels (b) are the box plots of difference between the true and estimated ED50.

Panels 1(a) and 1(b) are from an enrollment rate of 18 subjects per month.

Panels 2(a) and 2(b) are from an enrollment rate of 36 subjects per month.

Panels 3(a) and 3(b) are from an enrollment rate of 54 subjects per month.

Figure 4 Timing for interim analysis for different enrollment rates under pessimistic dosing scenario.

The upper panels (a) are the scatter plots and corresponding loess curve of average loss at different interim time.

The lower panels (b) are the box plots of difference between the true and estimated ED50.

Panels 1(a) and 1(b) are from an enrollment rate of 18 subjects per month.

Panels 2(a) and 2(b) are from an enrollment rate of 36 subjects per month.

Panels 3(a) and 3(b) are from an enrollment rate of 54 subjects per month.

| Dosing | Enrollment (per Month) | Optimal Interim Time (Month) | N1† (Per Arm) | N2‡ (Total) | No. of Subj. Completed Study↑ | Minimum Average Loss |
|---|---|---|---|---|---|---|
| Optimistic | 18 | 6.5 | 13 | 117 | 72 | 0.031 |
| | 36 | 4 | 16 | 144 | 72 | 0.0325 |
| | 54 | 3 | 18 | 162 | 54 | 0.0308 |
| Neutral | 18 | 6.5 | 13 | 117 | 72 | 0.0286 |
| | 36 | 4 | 16 | 144 | 72 | 0.038 |
| | 54 | 3 | 18 | 162 | 54 | 0.0335 |
| Pessimistic | 18 | 8 | 16 | 144 | 108 | 0.1051 |
| | 36 | 4.5 | 18 | 162 | 72 | 0.1146 |
| | 54 | 3.5 | 21 | 189 | 54 | 0.118 |

† N1 is the number of patients per arm in the interim analysis.

‡ N2 is the total number of patients in the interim analysis.

↑ Number of patients who had completed the study at the time of the interim analysis.

Table 3 Optimal Time of Interim Analysis under Various Dosing Schemes and Enrollment Rate

Table 3 shows the optimal time for the interim analysis in each situation, as given by the simulation. The optimal time and the corresponding minimum average loss are based on the fitted loess values. The higher the enrollment rate, the earlier the optimal interim time. However, at the optimal time, more subjects are included in the interim analysis than under slower enrollment. The reason is that with quick enrollment, subjects under investigation have had fewer visits at the interim, so more subjects are needed to compensate for the missing information. These trends are similar for all dosing scenarios. Also, with a slower enrollment rate, subjects in the interim analysis have had more visits, which enables the ITP model to give more precise predictions. With an enrollment rate of 18 patients per month, the design has the smallest minimum average loss under the neutral and pessimistic scenarios and nearly the smallest under the optimistic scenario (0.031 versus 0.0308 at 54 patients per month). This is because the interim time is more than 6 months after the start of the trial and more than 70%-80% of patients have already completed at least their fifth visit.

Comparing enrollment rates of 36 and 54 patients per month, the minimum average loss at 36 patients per month is higher under the optimistic and neutral dosing scenarios but smaller under the pessimistic scenario. This is because the optimal interim times under these two enrollment rates are similar (only one month later at 36 per month than at 54 per month), so patients have had similar numbers of visits at the interim analysis. Since more subjects are available with quicker enrollment, the average loss can be smaller or similar when comparing these two enrollment rates.

Comparing the three dosing scenarios, the average losses under optimistic and neutral dosing are similar and much smaller than under pessimistic dosing. This means there is more uncertainty in evaluating the dose-response relationship under pessimistic dosing. Thus, the "information collecting" period is longer under pessimistic dosing, which leads to a slightly later optimal interim time.

In this paper, we conducted a simulation study to assess the optimal time to perform the interim analysis in a delayed-response Bayesian adaptive design.

Our study confirmed that, when the interim analysis is appropriately timed, the Bayesian adaptive design is superior to the traditional fixed design. Choosing the timing of the interim analysis amounts to optimally allocating the information collected before and after the interim in order to maximize learning about the dose-response curve. Before the interim analysis, it is very important to ensure sufficient information so that the model can accurately extrapolate and predict dose effects that have not yet been observed. This information depends on how many subjects there are at the interim and on how many visits these subjects have completed. Our simulations show that, in general, the interim analysis should wait until a portion of subjects (around 15%-30%) have completed the study. If there is more uncertainty in estimating the dose-response curve, the interim analysis should be postponed until enough information has been collected. If the interim analysis is conducted too early, it cannot provide accurate predictions, and incorrect dose-selection decisions may be made; in that case, the Bayesian adaptive design could even be inferior to the traditional design. After the interim analysis, information collection should focus on the doses that are more informative for the dose-response curve, namely the kept dose arms. If the interim analysis is performed too late, more subjects are allocated to the non-informative treatment arms, and the design cannot achieve its best efficiency. In practice, it is important to perform such simulations to plan the proper time for the interim analysis.
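The 15%-30% guideline can be checked against Table 3 by dividing the number of subjects who had completed the study at the optimal interim by the total sample size of 324. The short Python sketch below does this; the shares range from about 17% (54 of 324) to about 33% (108 of 324, pessimistic scenario at 18 patients per month).

```python
# Subjects who had completed the study at the optimal interim time (Table 3),
# keyed by (dosing scenario, enrollment rate in patients per month).
completed = {
    ("Optimistic", 18): 72, ("Optimistic", 36): 72, ("Optimistic", 54): 54,
    ("Neutral", 18): 72, ("Neutral", 36): 72, ("Neutral", 54): 54,
    ("Pessimistic", 18): 108, ("Pessimistic", 36): 72, ("Pessimistic", 54): 54,
}
N_TOTAL = 324  # planned total sample size

shares = {key: n / N_TOTAL for key, n in completed.items()}
for (scenario, rate), share in sorted(shares.items(), key=lambda kv: kv[1]):
    print(f"{scenario:<12} {rate:>2}/month: {share:.1%} completed at interim")
```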

In addition, the prediction at the interim depends on how many visits patients have had by the time of the interim analysis. Under slow enrollment, patients will have completed more visits at the interim, so the design could be more efficient than under quick enrollment. However, the length of the trial and the progress of the whole project should also be considered when deciding the enrollment rate.

Furthermore, the ITP model, utility criterion and Emax model used in this paper are only examples; similar simulations can be extended to other models according to the nature of the compound being studied.

None.

The authors declare that there are no conflicts of interest.

©2016 Huang, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.
